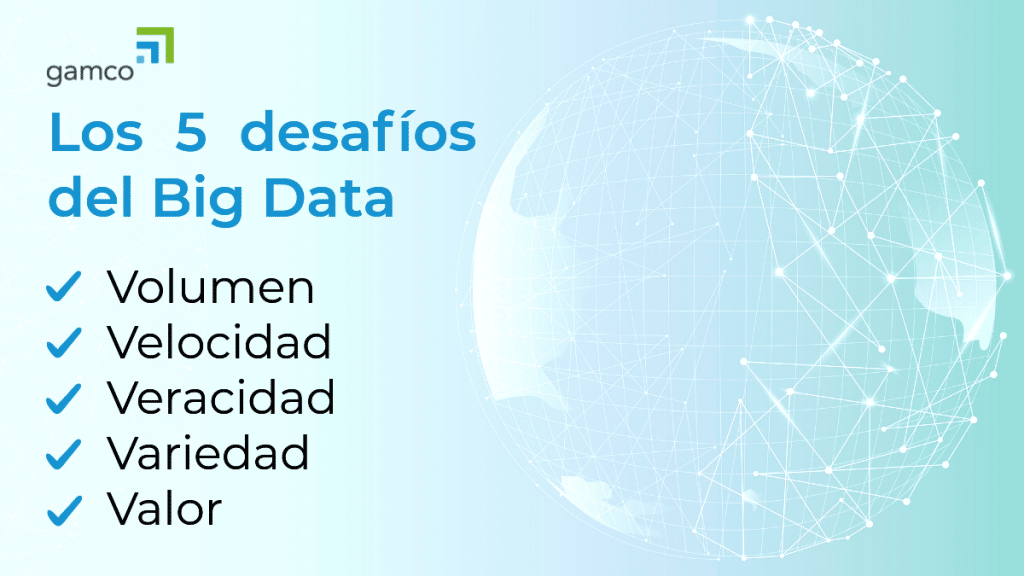

Five challenges of Big Data can be highlighted, each named with a V (volume, velocity, veracity, variety and value). R. Narasimhan discussed 3V as volume, variety and velocity, while F. J. Ohlhorst studied Big Data considering 4V: volume, variety, velocity and veracity. Alexandra L'Heureux also described the challenges faced by Machine Learning algorithms considering 4V, treating value not as an essential feature but as one of the products of the data processing task [Man11].

This article considers value one of the important qualities of Big Data because it affects privacy and other aspects of data. It therefore discusses the Big Data challenges faced by Machine Learning algorithms based on 5V: volume, velocity, variety, veracity and value.

Qiu et al. [QWD+16] provided a study of Machine Learning as it relates to Big Data, focused primarily on signal processing, and identified several of the most important concerns related to data scale, velocity, heterogeneity, incompleteness and uncertainty. Al-Jarrah et al. [AJYM+15] evaluated analytical features of Machine Learning for large-scale system efficiency.

Various Machine Learning techniques, and deep learning techniques in particular, have also been studied extensively with a focus on the problems caused by Big Data. Sivarajah et al. [SKIW17] studied the Big Data challenges identified and addressed by organizations and the analytical methods employed to overcome them, but did not specifically address Machine Learning. Gandomi and Haider classified the challenges of Big Data according to its dimensions, without reference to Machine Learning.

Big Data is often characterized by a large amount of data, huge storage size, and massive scale. According to Han Hu et al. [HWCL14], the distinctiveness of Big Data makes it difficult to design a scalable Big Data system. The variety and heterogeneous nature of the data often make it very difficult to collect and integrate data from disparate distributed systems in a scalable manner.

One of the challenges of Big Data is that its large volume makes it hard to train long-established machine learning algorithms on a single processor with a single storage device. Instead, parallel computing combined with distributed frameworks is preferred. To realize large-scale data processing in a parallel and distributed architecture, the alternating direction method of multipliers (ADMM) has been proposed [ZBB14], [LFJ13]; it provides a computational platform for building scalable, distributed convex optimization algorithms.
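As a rough illustration of how ADMM can split a convex problem across workers, the following minimal sketch fits a single least-squares coefficient over several data partitions using the consensus form of ADMM. The function names, the penalty parameter `rho`, the iteration count and the toy data layout are assumptions made for this example; they are not taken from the cited works.

```python
def local_update(partition, z, u, rho):
    """Worker-side step: minimize the local least-squares loss plus the
    ADMM penalty (rho / 2) * (x - z + u) ** 2, which has a closed form here."""
    saa = sum(a * a for a, _ in partition)
    sab = sum(a * b for a, b in partition)
    return (sab + rho * (z - u)) / (saa + rho)


def consensus_admm(partitions, rho=10.0, iterations=100):
    """Fit b ~ x * a over all partitions without pooling the raw data.

    Each partition is a list of (a, b) pairs. Only the scalars x_i and u_i
    travel between the workers and the coordinator.
    """
    n = len(partitions)
    z = 0.0            # shared (consensus) estimate
    u = [0.0] * n      # scaled dual variable, one per worker
    for _ in range(iterations):
        # 1. every worker solves its own small problem (parallelizable)
        x = [local_update(p, z, u[i], rho) for i, p in enumerate(partitions)]
        # 2. the coordinator averages the local results
        z = sum(xi + ui for xi, ui in zip(x, u)) / n
        # 3. every worker updates its dual variable
        u = [ui + xi - z for xi, ui in zip(x, u)]
    return z
```

On this toy problem the consensus value approaches the ordinary least-squares coefficient computed on the pooled data, although the raw pairs never leave their partition.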

Volume affects processing performance by increasing computational complexity. Some machine learning algorithms, such as SVM (Support Vector Machines), PCA (Principal Component Analysis) and logistic regression, show a steep increase in computation time as data size grows. Machine learning algorithms usually assume that the data to be processed fits entirely in memory, but large volumes break this assumption, giving rise to the "curse of modularity" in this class of algorithms.

Big Data is typically characterized as data with a large number of dimensions, or a large variable space, which leads to another major problem facing machine learning algorithms, known as the "curse of dimensionality". Most machine learning algorithms exhibit polynomial time complexity in the number of dimensions.
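Besides running time, high dimensionality also makes data sparse. One hedged way to see this is to compute how much of a unit hypercube is covered by the ball inscribed in it: the fraction collapses as the dimension grows, so points are rarely "close" to a given center. The helper name below is an assumption for illustration.

```python
import math


def inscribed_ball_fraction(d):
    """Fraction of the unit hypercube's volume that lies inside the
    inscribed ball of radius 0.5, in d dimensions."""
    ball_volume = math.pi ** (d / 2) / math.gamma(d / 2 + 1) * 0.5 ** d
    return ball_volume  # the unit hypercube has volume 1


for d in (1, 2, 5, 10, 20):
    print(d, inscribed_ball_fraction(d))
```

In two dimensions the ball covers about 79% of the square; in ten dimensions it covers well under 1% of the hypercube.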

To make Machine Learning algorithms scalable, cloud computing is considered as a way to provide the computational and storage capacity needed for large-scale data analysis. From this perspective, a cloud-based Machine Learning framework known as Distributed GraphLab was proposed in [LBG+12].

Data semantics is another Big Data challenge that Machine Learning algorithms face when processing large amounts of data. Most of the data collected comes from various sources, each with different semantics. Such data should not be stored as raw bit strings; it requires semantic indexing.

Big Data is composed of data in different formats, coming from sources that can be structured, semi-structured or completely unstructured. Fusing high-dimensional data from different sources poses the challenge of dealing with a high degree of complexity, data integration and data reduction.

The location of the data is a fundamental challenge for Machine Learning algorithms, which assume that all the data is in memory [KGDL13] or in the same disk file; this is often impossible in the case of Big Data. This led to an approach in which the computation moves to the location of the data, as opposed to moving the data to the location of the computation.
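The idea of moving the computation to the data can be sketched as follows: each partition computes a small summary where it lives, and only those summaries are combined centrally. This is a minimal single-process sketch; the function names are hypothetical, and a real system would run `local_stats` on the node that stores each partition.

```python
from functools import reduce


def local_stats(partition):
    """Runs next to the data: returns (count, sum, sum of squares)."""
    return len(partition), sum(partition), sum(v * v for v in partition)


def merge(a, b):
    """Combine two summaries; only three numbers cross the network."""
    return tuple(x + y for x, y in zip(a, b))


def global_mean_variance(partitions):
    """Mean and population variance over all partitions, computed
    from the per-partition summaries rather than the raw values."""
    n, s, ss = reduce(merge, (local_stats(p) for p in partitions))
    mean = s / n
    return mean, ss / n - mean * mean
```

The sum-of-squares formula is kept here for brevity; it can lose precision on large values, so a production version would likely use a more stable merge rule.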

Dimensionality reduction is an efficient method for handling high-dimensional data. Feature selection and feature extraction are the most commonly used approaches to reducing data dimensions. For example, Sun et al. [STG10] performed high-dimensional data analysis using a feature selection algorithm based on local learning. Such algorithms perform well in high dimensions, but their efficiency and accuracy decrease dramatically when they are used in a parallel distributed architecture.
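To make feature selection concrete, here is a minimal sketch of one of the simplest filter methods, a variance threshold that drops nearly constant columns. It is not the local-learning-based algorithm of Sun et al.; the function name and the threshold value are assumptions for illustration.

```python
from statistics import pvariance


def select_by_variance(rows, threshold):
    """Keep only the columns whose population variance exceeds threshold.

    rows is a list of equal-length numeric lists (one list per sample).
    Returns the kept column indices and the reduced rows.
    """
    columns = list(zip(*rows))
    keep = [j for j, col in enumerate(columns) if pvariance(col) > threshold]
    reduced = [[row[j] for j in keep] for row in rows]
    return keep, reduced


rows = [[1.0, 0.0, 5.0], [2.0, 0.0, 5.1], [3.0, 0.0, 4.9]]
keep, reduced = select_by_variance(rows, threshold=0.05)
```

With this toy input only the first column varies enough to be kept, so three dimensions are reduced to one.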

High dimensionality in Big Data often results in serious problems such as missing values, measurement errors, noisy data, and outliers, as mentioned by Fan et al. [FHL14], who considered noisy data to be one of the main challenges in analyzing Big Data. Swan [Swa13] also suggested that, because Big Data is noisy, it may be difficult to obtain meaningful results.

Big Data often consists of fast streaming data that arrives constantly, although some data may arrive at intervals rather than in real time. Machine Learning algorithms assume that the entire data set is available for processing at any given time, but this assumption often fails with Big Data. Incremental learning is therefore considered, so that algorithms can adapt to new information.
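The incremental idea can be illustrated on the simplest possible "model", a running mean and variance that is updated one value at a time without storing the stream. The sketch below uses Welford's update rule; the class name is an assumption for this example.

```python
class RunningStats:
    """Mean and population variance maintained incrementally."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Fold one new observation into the current estimates."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        return self._m2 / self.n if self.n else 0.0


stats = RunningStats()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    stats.update(value)
```

Each update costs constant time and memory, which is what makes this style of computation suitable for data that never stops arriving.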

Online learning has become one of the promising techniques for handling the Big Data velocity problem: it does not work on the whole dataset at once, but learns one instance at a time using a sequential learning strategy. It does, however, suffer from catastrophic interference.
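A minimal sketch of sequential, one-instance-at-a-time learning is the classic perceptron, shown below. The class and method names are assumptions for this example, and labels are assumed to be +1 or -1. Because each update only looks at the current instance, nothing protects what was learned from earlier instances, which is where interference can arise.

```python
class OnlinePerceptron:
    """Binary linear classifier trained one instance at a time."""

    def __init__(self, n_features, learning_rate=1.0):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        score = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if score > 0 else -1

    def learn_one(self, x, y):
        """Update the weights only when the current instance is misclassified."""
        if self.predict(x) != y:
            self.w = [wi + self.lr * y * xi for wi, xi in zip(self.w, x)]
            self.b += self.lr * y


# A toy stream in which the label is +1 when the first feature is larger.
stream = [((2, 0), 1), ((0, 2), -1), ((3, 1), 1), ((1, 3), -1)]
model = OnlinePerceptron(n_features=2)
for x, y in stream:
    model.learn_one(x, y)
```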

With the rapid adoption of real-time processing, driven by the proliferation of IoT, sensors such as RFID, and mobile devices, many streaming systems such as Apache Spark, Amazon Kinesis and Yahoo! S4 have emerged. These frameworks succeed at real-time processing but lack refined Machine Learning capabilities, although they provide features for integrating Machine Learning through external tools or languages.

Veracity refers to the quality and provenance of Big Data. According to IBM, Big Data sources are inherently unreliable, making it difficult to obtain quality data. Because it is collected from many different sources, Big Data often contains erroneous, inaccurate, missing, dirty and noisy values; it therefore tends to be uncertain and incomplete, which makes it a problem for Machine Learning algorithms to handle.

Examples of Big Data sources include social networks, crowdsourcing, weather data collected from sensors, and economic data. This data is often imprecise, uncertain and inaccurate because it lacks objectivity, which makes the learning task difficult for a Machine Learning algorithm.

Learning from this deficient and incomplete data is a challenging task, as most existing Machine Learning algorithms cannot be applied in a straightforward manner. Chen and Lin [wCL14] studied the application of deep learning methods to deal with noisy and incomplete data.
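Because many algorithms cannot consume incomplete records directly, a common workaround is to fill the gaps before training. The sketch below shows median imputation, a deliberately simple technique that is unrelated to the deep learning methods studied by Chen and Lin; representing a missing value as `None` and the function name are assumptions for this example.

```python
from statistics import median


def impute_median(values):
    """Replace missing entries (None) with the median of the observed ones.

    The median is used instead of the mean so that a few extreme,
    possibly erroneous readings do not dominate the fill value.
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


readings = [3.0, None, 1.0, 100.0, None]
cleaned = impute_median(readings)
```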

Again, Machine Learning methods often do not work efficiently when applied to real-time data streams. Learning from such high-dimensional data with complex architectures is a difficult optimization task.

The value that the data can generate once processed can be considered its most important characteristic. This aspect is what makes the information contained in the data useful and gives meaning to the development of solutions to the problems to be addressed. Knowledge discovery in databases and data mining approaches can provide substantial solutions [TLCY14], [WZWD14], [FPSS96], but they face multiple challenges, because the other dimensions of Big Data severely affect the value dimension.

Many authors have proposed solutions to Big Data problems using Machine Learning. For example, Wu et al. [WZWD14] proposed and exemplified Machine Learning and data mining algorithms based on Big Data processing.

In many real-world applications, the value or usefulness of processing results is subject to a time limit: if the result is not generated within a certain period, it loses its importance and relevance. Examples include predicting stock market values and agent-based buy and sell systems.

In addition, the value of the processing results depends heavily on how current the data is. The non-stationary nature of the data is another challenge for Machine Learning algorithms that were not designed to work with on-the-fly or streaming data.
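One simple, hedged way to keep an estimate current on non-stationary data is to weight recent observations more heavily than old ones. The sketch below uses an exponentially weighted moving average; the function name and the smoothing factor `alpha` are assumptions for this example.

```python
def ewma(stream, alpha):
    """Yield an exponentially weighted moving average after each value.

    alpha lies in (0, 1]; a larger alpha forgets old data faster and
    therefore follows a drifting stream more closely.
    """
    estimate = None
    for x in stream:
        if estimate is None:
            estimate = x  # start from the first observation
        else:
            estimate = alpha * x + (1 - alpha) * estimate
        yield estimate


# The level of the stream shifts from 10 to 20 halfway through.
estimates = list(ewma([10, 10, 20, 20], alpha=0.5))
```

After the shift the estimate moves toward the new level instead of staying anchored to the full history, as a plain average would.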

[AJYM+15] Omar Y. Al-Jarrah, Paul D. Yoo, Sami Muhaidat, George K. Karagiannidis, and Kamal Taha. Efficient machine learning for big data: A review. Big Data Research, 2(3):87-93, 2015. Big Data, Analytics, and High-Performance Computing.

[FHL14] Jianqing Fan, Fang Han, and Han Liu. Challenges of big data analysis. National Science Review, 1(2):293-314, Jun 2014. PMID: 25419469.

[FPSS96] Usama M. Fayyad, Gregory Piatetsky-Shapiro, and Padhraic Smyth. From data mining to knowledge discovery in databases. AI Mag., 17:37-54, 1996.

[HWCL14] Han Hu, Yonggang Wen, Tat-Seng Chua, and Xuelong Li. Toward scalable systems for big data analytics: A technology tutorial. IEEE Access, 2:652-687, 2014.

[JGL+14] H. V. Jagadish, Johannes Gehrke, Alexandros Labrinidis, Yannis Papakonstantinou, Jignesh M. Patel, Raghu Ramakrishnan, and Cyrus Shahabi. Big data and its technical challenges. Commun. ACM, 57(7):86-94, Jul 2014.

[KGDL13] K. Kumar, Jonathan Gluck, Amol Deshpande, and Jimmy Lin. Hone: "Scaling down" Hadoop on shared-memory systems. Proceedings of the VLDB Endowment, 6:1354-1357, 08 2013.

[Kia03] Melody Y. Kiang. A comparative assessment of classification methods. Decision Support Systems, 35(4):441-454, 2003.

[LBG+12] Yucheng Low, Danny Bickson, Joseph Gonzalez, Carlos Guestrin, Aapo Kyrola, and Joseph M. Hellerstein. Distributed GraphLab: A framework for machine learning and data mining in the cloud. Proc. VLDB Endow., 5(8):716-727, Apr 2012.

[LFJ13] Fu Lin, Makan Fardad, and Mihailo R. Jovanović. Design of optimal sparse feedback gains via the alternating direction method of multipliers. IEEE Transactions on Automatic Control, 58(9):2426-2431, 2013.

[Man11] J. Manyika. Big data: The next frontier for innovation, competition, and productivity. 2011.

[QWD+16] Junfei Qiu, Qihui Wu, Guoru Ding, Yuhua Xu, and Shuo Feng. A survey of machine learning for big data processing. EURASIP Journal on Advances in Signal Processing, 2016, 05 2016.

[SKIW17] Uthayasankar Sivarajah, Muhammad Mustafa Kamal, Zahir Irani, and Vishanth Weerakkody. Critical analysis of big data challenges and analytical methods. Journal of Business Research, 70:263-286, 2017.

[STG10] Yijun Sun, Sinisa Todorovic, and Steve Goodison. Local-learning-based feature selection for high-dimensional data analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(9):1610-1626, Sep 2010. PMID: 20634556.

[Swa13] Melanie Swan. The quantified self: Fundamental disruption in big data science and biological discovery. Big Data, 1:85-99, 06 2013.

[TLCY14] Chun-Wei Tsai, Chin-Feng Lai, Ming-Chao Chiang, and Laurence T. Yang. Data mining for internet of things: A survey. IEEE Communications Surveys & Tutorials, 16(1):77-97, 2014.

[wCL14] Xue wen Chen and Xiaotong Lin. Big data deep learning: Challenges and perspectives. IEEE Access, 2:514-525, 2014.

[WZWD14] Xindong Wu, Xingquan Zhu, Gong-Qing Wu, and Wei Ding. Data mining with big data. IEEE Transactions on Knowledge and Data Engineering, 26(1):97-107, 2014.

[ZBB14] Nadim Zgheib, Thomas Bonometti, and S. Balachandar. Long-lasting effect of initial configuration in gravitational spreading of material fronts. Theoretical and Computational Fluid Dynamics, 28(5):521-529, 2014.
